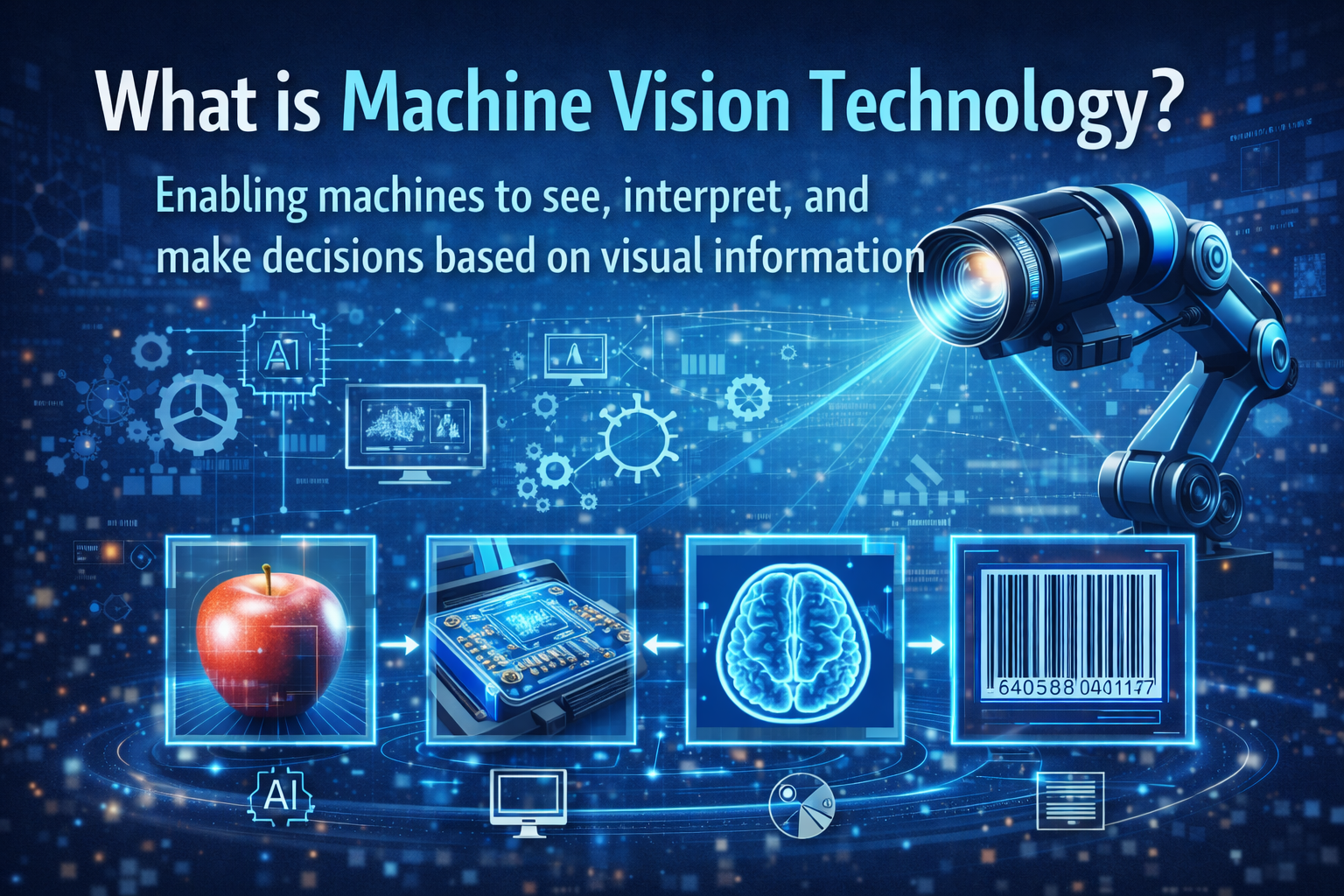

In an era where precision, speed, and consistent quality are paramount, industrial automation depends increasingly on machine vision technology: a field that enables computers to “see” and interpret the world much as humans do, but with far greater consistency, speed, and objectivity. By replicating the human sense of sight, machine vision systems empower machines to perform complex inspection, guidance, identification, and measurement tasks, driving efficiency and innovation across many industries. In 2026, understanding this technology is essential for anyone looking to optimize processes, enhance product quality, and secure a competitive edge.

Key Takeaways

- Machine vision technology allows computers to “see” and interpret images for automation tasks, offering superior speed and accuracy compared to human inspection.

- It comprises interconnected components: cameras, optics, illumination, processors, and specialized software that work together to acquire and analyze visual data.

- Applications span quality control, automated inspection, robot guidance, measurement, and identification in sectors such as manufacturing, automotive, and pharmaceuticals.

- The integration of AI, particularly deep learning, is rapidly transforming machine vision, enabling more adaptable, intelligent, and robust systems.

- Key benefits include enhanced product quality, increased production efficiency, reduced costs, and improved workplace safety.

What Is Machine Vision Technology?

At its core, machine vision technology is a scientific field and technological domain concerned with equipping computers with the ability to “see” and understand images. Unlike human vision, which is subjective and prone to fatigue, machine vision systems provide objective, repeatable, and non-contact inspection and analysis. These systems are designed to perform tasks that require visual perception, translating light into digital data that can be processed and acted upon by other automated systems.

Imagine a production line where thousands of products zip by every minute. A human inspector would quickly tire and miss defects. A machine vision system, however, can meticulously examine each item, identify even minute flaws, verify assembly, read barcodes, and ensure everything meets stringent quality standards, all without a coffee break. This capability is what makes machine vision indispensable in modern industrial settings.

Distinguishing Machine Vision from Computer Vision and Image Processing

While often used interchangeably, it’s important to understand the subtle distinctions between machine vision, computer vision, and image processing:

- Image Processing: This is the foundational step. It involves manipulating raw image data to enhance it, filter noise, or extract specific features. Think of it as refining the raw input for better analysis.

- Computer Vision: A broader academic field that explores how computers can gain a high-level understanding from digital images or videos. Its goal is often to enable computers to perform complex cognitive tasks, such as recognizing faces, understanding scenes, or interpreting human behavior. It has applications beyond industrial automation, including self-driving cars and medical imaging.

- Machine Vision: This is the application-oriented subset of computer vision. It focuses on using these technologies in industrial and manufacturing environments to automate specific tasks, primarily for inspection, guidance, identification, and measurement. It’s about practical implementation for specific, repeatable industrial processes.

“Machine vision takes the theoretical power of computer vision and grounds it in the practical demands of the factory floor, delivering tangible benefits in quality and efficiency.”

Core Components of a Machine Vision System

A complete machine vision system is an intricate orchestration of hardware and software, each playing a vital role in enabling the system to “see” and interpret. Understanding these components is key to understanding how machine vision technology works.

1. Image Acquisition: The Eyes of the System 👀

This is where the visual data is captured. It involves:

- Cameras: The primary device for converting light into an electrical signal. There’s a wide range of cameras:

  - CCD (Charge-Coupled Device) Cameras: Traditional, known for high image quality and low noise.

  - CMOS (Complementary Metal-Oxide Semiconductor) Cameras: More modern, faster, lower power consumption, and often more cost-effective. They are increasingly prevalent in 2026.

  - Line Scan Cameras: Ideal for continuous web inspection (e.g., paper, fabric) or cylindrical objects, building an image one line at a time.

  - Area Scan Cameras: Capture a 2D image of a defined area, suitable for inspecting stationary or slow-moving objects.

  - 3D Cameras: Utilize technologies like structured light or time-of-flight to capture depth information, crucial for complex geometries and robot guidance.

- Optics (Lenses): Crucial for focusing light onto the camera sensor. Lenses determine the Field of View (FOV), working distance, and image quality. Different lenses (e.g., telecentric, fixed focal length, zoom) are chosen based on the application’s specific requirements for magnification and distortion control.

- Illumination (Lighting): Often considered the most critical component for a successful machine vision application. Proper lighting enhances contrast, highlights features, and minimizes shadows, making defects visible and simplifying image analysis. Types include:

  - Bright Field: Direct light on the object.

  - Dark Field: Light at a low angle to highlight surface irregularities.

  - Backlight: Silhouettes the object, ideal for measuring dimensions or detecting foreign particles.

  - Diffused Light: Provides uniform, shadow-free illumination for highly reflective surfaces.

  - Structured Light: Projects a pattern (e.g., laser lines) onto an object to extract 3D information.

- Frame Grabber (or Vision Controller): A piece of hardware that acts as an interface between the camera and the processing unit. It captures the raw image data from the camera and transfers it to the computer’s memory for processing. In modern digital cameras, this functionality is often integrated directly into the camera or the industrial PC.
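Because lighting is judged largely by the contrast it produces, candidate lighting setups can be compared numerically rather than by eye. The sketch below is a minimal illustration in plain Python, assuming 8-bit grayscale samples taken from a feature and from its background. The function name and sample values are hypothetical, and Michelson contrast is only one common choice of metric.

```python
from statistics import mean

def michelson_contrast(feature_pixels, background_pixels):
    """Contrast between mean feature and mean background brightness (0 to 1)."""
    f = mean(feature_pixels)
    b = mean(background_pixels)
    if f + b == 0:
        return 0.0  # both regions fully black: no measurable contrast
    return abs(f - b) / (f + b)

# Hypothetical 8-bit samples of a scratch and the surrounding surface
# under two lighting setups.
bright_field = michelson_contrast([120, 125, 118], [140, 138, 142])
dark_field = michelson_contrast([200, 210, 205], [30, 28, 35])

# Pick the setup that separates the feature from the background best.
best = "dark field" if dark_field > bright_field else "bright field"
```

With these made-up samples the low-angle setup scores far higher, which matches the idea that dark field lighting highlights surface irregularities.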

2. Processing Unit: The Brain of the System 🧠

Once an image is acquired, it needs to be processed and analyzed. This is typically handled by:

- Industrial PCs (IPCs): Robust computers designed for harsh industrial environments, offering high processing power for complex vision tasks.

- Embedded Vision Systems: Compact, purpose-built processors integrated directly into the camera or a small controller. They are often more cost-effective and power-efficient for specific tasks.

- Smart Cameras: Cameras that have integrated processing capabilities, allowing them to perform image analysis directly without an external PC. This makes them highly compact and suitable for distributed applications.

- GPUs (Graphics Processing Units): Increasingly used, especially with AI and deep learning, to accelerate complex parallel computations required for advanced image analysis.

3. Software: The Intelligence and Algorithms 💻

The software is where the “seeing” becomes “understanding.” It encompasses:

- Image Processing Libraries: Collections of algorithms for tasks like filtering, enhancement, noise reduction, and geometric transformations.

- Vision Software Platforms: User-friendly interfaces (e.g., Cognex VisionPro, Halcon, LabVIEW IMAQ) that allow engineers to develop and deploy vision applications using pre-built tools for tasks like pattern matching, edge detection, optical character recognition (OCR), and color analysis.

- AI and Deep Learning Frameworks: Essential for complex, variable, or subjective inspection tasks. These frameworks allow systems to learn from large datasets of images, identifying defects or classifying objects with high accuracy, even when variations are present.

4. Output and Integration: The Action Layer ⚙️

After processing, the system needs to communicate its findings:

- I/O (Input/Output): Digital signals sent to PLCs (Programmable Logic Controllers), robots, or other automation equipment to trigger actions (e.g., reject a part, stop the line).

- Data Logging and Reporting: Storing inspection results, images, and statistical data for quality control tracking, process improvement, and compliance.

- Human Machine Interface (HMI): Graphical displays for operators to monitor system status, view images, and make adjustments.
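As a rough illustration of this action layer, the sketch below turns an inspection result into a reject signal and a log record. It assumes a single digital output where 1 means "divert the part"; the `InspectionResult` fields and function names are hypothetical, and a real system would write to PLC I/O and a file or database rather than an in-memory buffer.

```python
import csv
import io
from dataclasses import dataclass, asdict

@dataclass
class InspectionResult:
    part_id: str
    passed: bool
    defect_count: int

def reject_signal(result):
    """Digital output level for a hypothetical reject gate: 1 = divert the part."""
    return 0 if result.passed else 1

def log_results(results, stream):
    """Write inspection results as CSV rows for later quality tracking."""
    writer = csv.DictWriter(stream, fieldnames=["part_id", "passed", "defect_count"])
    writer.writeheader()
    for r in results:
        writer.writerow(asdict(r))

results = [InspectionResult("A001", True, 0), InspectionResult("A002", False, 2)]
signals = [reject_signal(r) for r in results]

log = io.StringIO()  # stands in for a file or database in this sketch
log_results(results, log)
```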

How Machine Vision Technology Works: A Step-by-Step Process

To see how machine vision technology works in practice, let’s break down its operational workflow:

- Image Acquisition: The process begins when an object enters the camera’s field of view. The lighting illuminates the object, and the camera captures an image, converting light photons into electrical signals (analog or digital).

- Digitization (if needed): For analog cameras, the electrical signal is converted into digital data by the frame grabber. Digital cameras bypass this, sending data directly. This data consists of a grid of pixels, each with a numerical value representing its brightness and color.

- Image Preprocessing: The raw digital image often contains noise or imperfections. Software algorithms are applied to enhance the image, such as filtering to remove noise, adjusting contrast, or correcting for lens distortion. This step makes the subsequent analysis more robust.

- Feature Extraction: Key features relevant to the inspection task are identified. This could involve finding edges, corners, blobs, textures, or specific patterns. For example, in a bottle inspection, the software might look for the bottle’s cap, label, and fill level.

- Image Analysis and Measurement: Using predefined algorithms or AI models, the system performs the specific inspection task. This might include:

  - Pattern Matching: Comparing the captured image to a known “gold standard” pattern.

  - Counting: Determining the number of objects.

  - Localization: Identifying the precise position and orientation of an object.

  - Measurement: Calculating dimensions, areas, or volumes.

  - Defect Detection: Identifying anomalies, scratches, foreign objects, or incorrect assembly.

  - Optical Character Recognition (OCR)/Verification (OCV): Reading and verifying text, batch codes, or serial numbers.

- Decision Making and Output: Based on the analysis, the system makes a decision (e.g., “pass” or “fail,” “correct position,” “identify part number”). This decision is then communicated to other automated systems, triggering an action like diverting a faulty product, guiding a robot arm, or logging data.
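The workflow above can be sketched end to end on a tiny grayscale image. This is a simplified illustration in plain Python, not how a production system would be written (a library such as OpenCV would normally handle these steps). It assumes dark pixels indicate defects, skips preprocessing, and uses a hypothetical brightness threshold and defect limit.

```python
def threshold(image, level):
    """Binarize: 1 where the pixel is darker than `level` (a candidate defect)."""
    return [[1 if px < level else 0 for px in row] for row in image]

def count_blobs(binary):
    """Count 4-connected groups of 1-pixels using an iterative flood fill."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] and not seen[r][c]:
                blobs += 1
                seen[r][c] = True
                stack = [(r, c)]
                while stack:
                    y, x = stack.pop()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        inside = 0 <= ny < rows and 0 <= nx < cols
                        if inside and binary[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            stack.append((ny, nx))
    return blobs

def inspect(image, level=100, max_defects=0):
    """Feature extraction, analysis, and decision for one image."""
    defects = count_blobs(threshold(image, level))
    verdict = "pass" if defects <= max_defects else "fail"
    return {"defects": defects, "verdict": verdict}

# Hypothetical 4x5 image: bright surface with two separate dark marks.
image = [
    [200, 200, 200, 200, 200],
    [200,  40,  45, 200, 200],
    [200, 200, 200, 200,  30],
    [200, 200, 200, 200, 200],
]
result = inspect(image)  # two blobs found, so the part fails
```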

Applications of Machine Vision Technology in 2026

The versatility of machine vision technology makes it a cornerstone of Industry 4.0, transforming operations across a vast array of sectors. In 2026, its applications continue to expand and deepen.

| Industry | Common Machine Vision Applications | Benefits |
|---|---|---|
| Manufacturing & Automation | Quality control, assembly verification | Increased throughput, superior quality control, reduced rework, improved safety. |
| Automotive | Robot guidance, defect detection | Enhanced safety standards, precision manufacturing, defect reduction in complex components. |
| Pharmaceuticals & Medical Devices | Packaging inspection, counterfeit detection | Ensuring patient safety, regulatory compliance, preventing counterfeits, high-speed inspection. |
| Food & Beverage | Sorting, foreign object detection | Food safety assurance, waste reduction, brand protection, consistency in product presentation. |
| Electronics | Component inspection, alignment | Miniaturization support, extreme precision for tiny components, yield improvement. |
| Logistics & Packaging | Barcode reading, package sorting | Increased sorting speed, reduced errors, improved traceability, inventory management. |

Key Benefits of Machine Vision Technology

Implementing machine vision systems brings a multitude of advantages that directly impact profitability and operational excellence:

- ✅ Superior Accuracy and Consistency: Removes the fatigue and subjectivity of manual inspection, performing repetitive tasks with consistent precision around the clock.

- ⚡ Increased Speed and Throughput: Processes thousands of items per minute, far exceeding human capabilities, leading to higher production rates.

- 💰 Cost Reduction: Minimizes scrap, rework, and warranty claims by catching defects early. Reduces labor costs associated with manual inspection.

- 📈 Enhanced Quality Control: Ensures every product meets stringent quality standards, improving brand reputation and customer satisfaction.

- 📊 Data Collection and Analysis: Provides valuable data on production quality, enabling continuous process improvement and predictive maintenance.

- 🛡️ Improved Safety: Automates inspection in hazardous or ergonomically challenging environments, protecting human workers.

- 📏 Precise Measurement: Performs non-contact dimensional measurements that can reach micrometer-level accuracy with suitable optics and calibration, crucial for tight tolerances.

Challenges and Considerations in Machine Vision Implementation

While highly beneficial, implementing machine vision is not without its challenges. Understanding these ensures a smoother deployment:

- Initial Investment: The upfront cost of hardware (cameras, lenses, lighting) and sophisticated software can be significant.

- Complexity of Setup: Achieving optimal performance requires expertise in optics, lighting, and algorithm development. Poor lighting or camera choice can render the system ineffective.

- Environmental Factors: Dust, vibrations, temperature fluctuations, and inconsistent ambient lighting can affect system performance.

- Variety in Objects: Highly variable object appearances, reflections, or complex geometries can be challenging for traditional rule-based algorithms.

- Integration with Existing Systems: Seamlessly integrating a vision system with PLCs, robots, and factory MES (Manufacturing Execution Systems) requires careful planning.

- Maintenance and Calibration: Systems require periodic maintenance, cleaning, and recalibration to ensure continued accuracy.

The Future of Machine Vision Technology in 2026 and Beyond

The landscape of machine vision is continuously evolving, with exciting advancements shaping its future. In 2026, several trends are particularly prominent:

1. AI and Deep Learning Integration: The Game Changer

This is perhaps the most significant trend. Traditional machine vision relies on rule-based algorithms programmed by humans. AI and deep learning allow systems to learn patterns and make decisions from vast amounts of image data. This leads to:

- Enhanced Defect Detection: Identifying subtle, previously undetectable defects or anomalies without explicit programming.

- Greater Flexibility: Adapting to variations in product appearance, lighting, or orientation, reducing the need for rigid setup.

- Complex Classification: Accurately classifying objects based on subjective criteria, like texture or aesthetic appeal.

- Reduced Programming Effort: Shifting from manual algorithm development to training neural networks with example data.

2. 3D Vision for Advanced Applications

While 2D vision is excellent for many tasks, 3D vision, using technologies like structured light, stereo vision, and time-of-flight, is becoming more prevalent. This enables:

- Precise Volume and Shape Measurement: Crucial for packaging, quality control of complex parts.

- Robot Guidance in Complex Environments: Allowing robots to pick objects from bins (bin picking) or navigate in unstructured spaces.

- Surface Inspection of Highly Reflective or Textured Objects: Where 2D contrast is insufficient.
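For stereo vision specifically, depth follows from how far a point shifts between the two camera images. The sketch below uses the standard relation Z = f × B / d for an idealized, rectified pair of parallel cameras. The focal length, baseline, and disparity values are hypothetical.

```python
def depth_from_disparity(focal_length_px, baseline_mm, disparity_px):
    """Distance to a point seen by two parallel cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_length_px * baseline_mm / disparity_px

# Hypothetical rig: 1400 px focal length, cameras 60 mm apart.
near = depth_from_disparity(1400, 60, 168)  # 500.0 mm
far = depth_from_disparity(1400, 60, 84)    # 1000.0 mm
```

Halving the disparity doubles the estimated distance, which is why depth resolution degrades for far-away objects.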

3. Edge Computing and Embedded Vision

Processing images closer to the source (at the “edge” of the network) reduces latency and bandwidth requirements. This trend involves:

- Smart Cameras with Onboard AI: Performing inspection and analysis directly at the camera level, making systems faster and more compact.

- Decentralized Processing: Distributing vision tasks across multiple devices, improving scalability and reliability.
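The bandwidth argument can be made concrete with a back-of-the-envelope calculation. The sketch below compares streaming uncompressed frames to a central PC with sending only a small result message per frame from a smart camera. The resolution, frame rate, and 64-byte result size are illustrative assumptions.

```python
def raw_stream_mbps(width_px, height_px, bytes_per_pixel, frames_per_second):
    """Bandwidth of an uncompressed image stream in megabits per second."""
    bits_per_frame = width_px * height_px * bytes_per_pixel * 8
    return bits_per_frame * frames_per_second / 1_000_000

def result_stream_mbps(bytes_per_result, results_per_second):
    """Bandwidth when only inspection results leave the camera."""
    return bytes_per_result * 8 * results_per_second / 1_000_000

# Hypothetical 1920x1080 monochrome camera at 60 frames per second.
raw = raw_stream_mbps(1920, 1080, 1, 60)  # close to 1 Gbit/s
edge = result_stream_mbps(64, 60)         # a few hundredths of a Mbit/s
```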

4. Hyperspectral and Multispectral Imaging

Moving beyond the visible light spectrum, these technologies capture data across many narrow wavelength bands, revealing information invisible to the human eye. This is revolutionary for:

- Food Quality and Safety: Detecting ripeness, spoilage, or contaminants in food.

- Material Sorting: Differentiating plastics or other materials by their chemical composition.

- Medical Diagnostics: Analyzing tissue properties.

5. User-Friendly Interfaces and Low-Code/No-Code Solutions

As machine vision matures, there’s a drive to make it more accessible to a broader range of users. Intuitive graphical interfaces and drag-and-drop programming environments are simplifying the deployment and maintenance of vision systems, reducing reliance on highly specialized programmers.

How to Implement a Basic Machine Vision System

Implementing a machine vision system requires a structured approach. Here’s a simplified How-To guide:

Step 1: Define the Inspection Goal Clearly

Before selecting any hardware, precisely articulate what problem the machine vision system needs to solve. Is it detecting a scratch, measuring a dimension, reading a barcode, verifying assembly, or something else? What are the success criteria, accuracy requirements, and speed demands?

“A well-defined goal is the cornerstone of a successful machine vision deployment. Without it, you’re just capturing images.”

Step 2: Select Appropriate Components (Camera, Lens, Illumination) 📸

- Camera: Choose based on resolution (number of pixels), frame rate (speed), and interface (GigE, USB3, CameraLink).

- Lens: Select focal length to achieve desired Field of View (FOV) and working distance. Consider telecentric lenses for precise measurement without perspective distortion.

- Illumination: This is critical. Experiment with different lighting types (e.g., ring light, backlight, diffused dome) and colors (e.g., red, blue, white) to achieve maximum contrast for the features you need to inspect.
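A quick calculation can show whether a camera and lens combination is likely to resolve the smallest feature of interest. The sketch below uses the common approximation FOV ≈ sensor width × working distance / focal length, which holds when the working distance is much larger than the focal length. The sensor, lens, and defect sizes are hypothetical.

```python
def field_of_view_mm(sensor_width_mm, focal_length_mm, working_distance_mm):
    """Approximate horizontal FOV, valid when working distance >> focal length."""
    return sensor_width_mm * working_distance_mm / focal_length_mm

def mm_per_pixel(fov_mm, pixels_across):
    """Object-space size covered by one pixel."""
    return fov_mm / pixels_across

# Hypothetical setup: 7.2 mm wide sensor, 25 mm lens, 500 mm working distance.
fov = field_of_view_mm(sensor_width_mm=7.2, focal_length_mm=25, working_distance_mm=500)
scale = mm_per_pixel(fov, pixels_across=1920)

# How many pixels would a 0.3 mm defect cover?
min_defect_mm = 0.3
pixels_on_defect = min_defect_mm / scale
```

Here the field of view comes out at about 144 mm and the defect spans roughly four pixels. Whether that is enough depends on the inspection algorithm and image quality.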

Step 3: Set Up Hardware and Calibrate 🛠️

Mount the camera, lens, and lighting securely. Ensure stability. Connect the camera to your vision processor (e.g., industrial PC or smart camera). If precise measurements are needed, perform a camera calibration using a known calibration grid to correct for lens distortion and map pixel units to real-world units.
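The pixel-to-real-world mapping can be sketched in its simplest form as a single scale factor derived from two calibration marks a known distance apart. This deliberately ignores lens distortion and perspective, which a full calibration (for example with the camera calibration routines in OpenCV) would also correct. The coordinates and distances below are hypothetical.

```python
import math

def scale_mm_per_px(p1, p2, known_distance_mm):
    """Scale factor from two calibration marks a known distance apart."""
    pixel_distance = math.dist(p1, p2)
    if pixel_distance == 0:
        raise ValueError("calibration points must be distinct")
    return known_distance_mm / pixel_distance

# Two grid marks 20 mm apart, found at these pixel coordinates.
scale = scale_mm_per_px((100, 200), (500, 200), known_distance_mm=20.0)

# Convert a measured part width from pixels to millimeters.
width_px = 642
width_mm = width_px * scale
```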

Step 4: Develop/Configure Software Algorithms 🧩

Install your chosen machine vision software (e.g., Cognex VisionPro, Halcon, or open-source libraries like OpenCV). Use the software tools to:

- Acquire Images: Ensure consistent image capture.

- Preprocess: Apply filters (e.g., blur, sharpen) to enhance features and reduce noise.

- Locate Features: Use tools like pattern matching to find specific parts or features.

- Inspect/Measure: Apply tools for edge detection, blob analysis, OCR, or dimensional measurements.

- Set Thresholds: Define pass/fail criteria.

For AI-driven systems, this step involves collecting and annotating a diverse dataset of images, then training a deep learning model.
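To give a feel for the Locate Features step in a rule-based system, the sketch below implements a very basic form of pattern matching: sliding a template over the image and scoring each position by the sum of absolute differences (SAD). Commercial tools and OpenCV use more robust methods, so this is only an illustration. The image, template, and acceptance threshold are hypothetical.

```python
def sad(image, template, top, left):
    """Sum of absolute differences between the template and one image window."""
    return sum(
        abs(image[top + r][left + c] - template[r][c])
        for r in range(len(template))
        for c in range(len(template[0]))
    )

def locate(image, template):
    """Return (row, col, score) of the best-matching window; lower is better."""
    th, tw = len(template), len(template[0])
    best = None
    for top in range(len(image) - th + 1):
        for left in range(len(image[0]) - tw + 1):
            score = sad(image, template, top, left)
            if best is None or score < best[2]:
                best = (top, left, score)
    return best

template = [[0, 255],
            [255, 0]]
image = [
    [10,  10,  10, 10],
    [10,   5, 250, 10],
    [10, 250,   5, 10],
    [10,  10,  10, 10],
]
row, col, score = locate(image, template)  # best match at row 1, col 1
found = score <= 40  # hypothetical pass/fail threshold on the match score
```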

Step 5: Integrate and Test Thoroughly 🧪

Connect the vision system’s outputs (e.g., pass/fail signals) to your production line’s control system (PLC, robot). Conduct extensive testing with a variety of known good and known bad parts. Iterate on lighting, camera settings, and software algorithms until the desired accuracy and reliability are consistently achieved.
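Testing with known good and known bad parts yields two numbers worth tracking: how often good parts are wrongly rejected, and how often bad parts slip through. The sketch below computes both from a labeled test run. The function name, label strings, and sample data are hypothetical.

```python
def validation_summary(ground_truth, verdicts):
    """Compare system verdicts with known part status ('good' / 'bad')."""
    pairs = list(zip(ground_truth, verdicts))
    false_rejects = sum(1 for t, v in pairs if t == "good" and v == "fail")
    escapes = sum(1 for t, v in pairs if t == "bad" and v == "pass")
    good_total = ground_truth.count("good")
    bad_total = ground_truth.count("bad")
    return {
        "false_reject_rate": false_rejects / good_total if good_total else 0.0,
        "escape_rate": escapes / bad_total if bad_total else 0.0,
    }

# Hypothetical test run: four known good parts, two known bad parts.
truth    = ["good", "good", "good", "good", "bad", "bad"]
verdicts = ["pass", "pass", "fail", "pass", "fail", "pass"]
summary = validation_summary(truth, verdicts)
```

In this made-up run one of four good parts is rejected and one of two bad parts escapes, so both lighting and thresholds would need another iteration.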

Frequently Asked Questions about Machine Vision Technology (FAQ)

Which industries use machine vision technology?

Machine vision technology is crucial in manufacturing (quality control, assembly verification), automotive (robot guidance, defect detection), pharmaceuticals (packaging inspection, counterfeit detection), food and beverage (sorting, foreign object detection), electronics (component inspection, alignment), and logistics (barcode reading, package sorting).

How does AI enhance machine vision in 2026?

In 2026, AI, particularly deep learning, significantly enhances machine vision by enabling systems to learn and adapt to complex visual patterns, improving accuracy in defect detection, object recognition, and classification. This reduces the need for extensive manual programming and allows for more robust and flexible inspection systems, even in dynamic environments.

What are the main components of a machine vision system?

A typical machine vision system consists of several core components: an image acquisition device (camera), optics (lenses), illumination (lights), a frame grabber (for analog cameras, though often integrated into digital cameras), a processor (industrial PC or embedded system), and specialized software for image processing and analysis.

What are the benefits of implementing machine vision technology?

Implementing machine vision technology offers numerous benefits, including increased accuracy and consistency in inspection, enhanced product quality, reduced manufacturing costs due to fewer defects and less rework, improved production throughput, enhanced safety by automating hazardous tasks, and the ability to collect valuable data for process optimization.

Conclusion: Embracing the Visionary Future in 2026

As we’ve explored, machine vision technology is far more than just cameras and computers; it’s a sophisticated system that grants machines the power of sight, transforming industrial processes and elevating quality standards. From the precision of defect detection in semiconductors to the swift sorting of produce, its impact is undeniable and growing.

In 2026, with the pervasive integration of AI and deep learning, machine vision systems are becoming more intelligent, adaptable, and easier to deploy than ever before. This evolution is enabling manufacturers to tackle previously insurmountable inspection challenges, drive down costs, increase efficiency, and usher in an era of unparalleled product quality and operational excellence.

For businesses looking to stay competitive, investing in and understanding machine vision is paramount. It’s not merely an automation tool; it’s a strategic asset that provides critical insights, ensures consistency, and unlocks new possibilities for innovation. The future of manufacturing is visual, and machine vision is the lens through which that future is clearly focused.

Actionable Next Steps:

- Assess Your Needs: Identify specific areas in your operations where visual inspection or measurement is critical, prone to human error, or a bottleneck.

- Consult Experts: Engage with machine vision integrators or consultants to evaluate the feasibility and potential ROI for your specific applications.

- Start Small: Consider implementing a pilot project on a less critical but impactful application to gain experience and demonstrate value.

- Invest in Training: Equip your team with the knowledge to operate and maintain these advanced systems, especially given the rise of AI-driven solutions.

- Stay Updated: Continuously monitor advancements in AI, 3D vision, and new sensor technologies to leverage emerging capabilities in 2026 and beyond.